When the dust started settling on the 2004 presidential election, we journalists were doing our usual postmortems about our coverage and influence (or lack thereof) on the election. For the first time, the word “blogger” was prominent, but not for entirely positive reasons.

On Election Day, exit-poll results were widely available on the Internet. People with access to the numbers—probably inside journalism organizations—leaked the numbers to bloggers, who promptly posted the data for all to see. The results were dramatic, in more ways than one. John Kerry seemed to be winning, and his supporters became cautiously euphoric. Stock markets reacted badly, as some traders began selling certain industries in anticipation of a Kerry administration’s likely policies. But as we’ve learned, the exit polls—which ran counter to just about every pre-election survey showing George W. Bush in the lead—were wrong.

In this emerging era of grassroots journalism, things are getting messy. Or, more accurately, messier. And the election polling uproar is just one more piece of evidence.

As I observed on these pages several years ago, the 20th century model of centralized newsgathering and distribution is being augmented (and in some cases will be replaced) by an emergent phenomenon of increasingly ubiquitous and interwoven networks. Technology has collided squarely with journalism, giving people at the edges of those networks low-cost and easy-to-use tools to create their own media, and the data networks are giving them global reach.

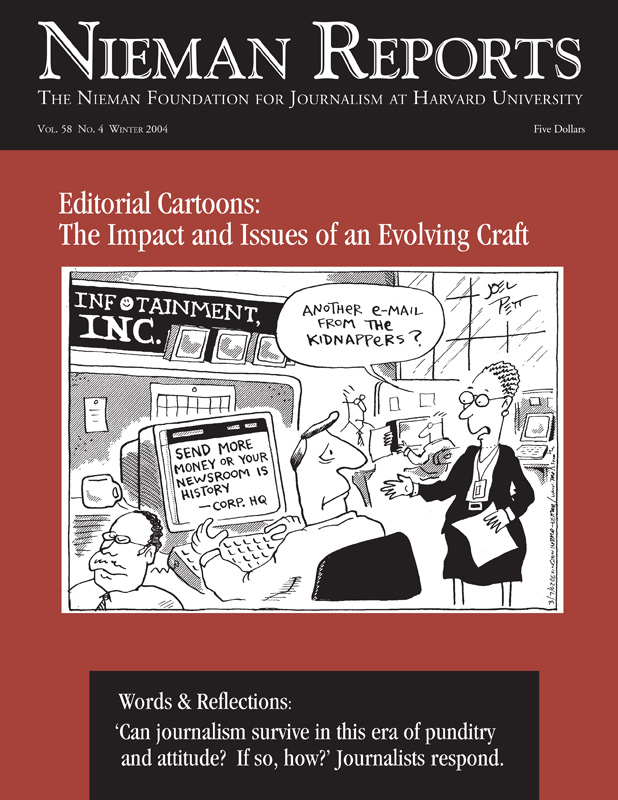

Meanwhile, our business model is under attack as never before, by people using the same technologies to carve away our revenues. Think of eBay as the largest classified advertising site on the planet, and you get the idea.

My focus here, however, is on the messiness factor. It will be a growing source of discomfort among journalists. Our gatekeeping role is under challenge, along with our credibility. And as we saw in the exit-poll debacle, the messiness will have serious consequences while we sort it out, assuming we can. I believe we can, but it will take a fair bit of time.

The core of the issue is fragmentation. The news audience seems to be going its own way. Certainly there’s some retreat to quality, to sources of information we learn to trust in online searching, rather than surfing to random “news” sites that turn out to give false information. But there’s equally a hunt for better information than we’re getting from mainstream media. It is not an accident that The Guardian’s Web site saw an enormous surge in traffic before the Iraq War began. The visitors were, in large part, Americans who knew they weren’t getting anything like the full story from newspapers and broadcasters that seemed to become little more than propaganda arms of the Bush administration after the September 11th attacks.

Now contemplate The Guardian times ten thousand, or a million. No, most of those other alternative sources won’t attract many readers, but collectively they contribute to the audience fragmentation. Readers, along with viewers of and listeners to the increasingly sophisticated online media being offered by the grassroots, are learning, perhaps too slowly, to find trusted sources but also to exercise caution. I can’t emphasize enough the need for reader caution, because I don’t expect the grassroots journalists to exercise much restraint. I wish bloggers were more responsible, but I value their First Amendment rights as much as anyone else’s.

Some help might be coming from Silicon Valley, where I live and work. Technology helped create this messiness. It might help solve it. The tools of media creation and distribution are more powerful and ubiquitous. Now we need tools to better manage the flood of what results. Early entrants in this field are promising. A new file format called “Really Simple Syndication,” or RSS, lets software parse many different Web sites and aggregate them into one collection of news and other kinds of information. News people who don’t know what RSS is should learn. Yesterday.
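For readers who want to see what “parse and aggregate” means in practice, here is a minimal sketch in Python. It assumes plain RSS 2.0 documents with `title`, `link` and `pubDate` elements, and it uses two made-up feeds held in strings rather than fetching anything from a real site. The names `parse_feed` and `aggregate` are mine, chosen for illustration; real newsreaders do considerably more (fetching, caching, coping with malformed feeds).

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Two invented RSS 2.0 documents standing in for feeds from real sites.
FEED_A = """<rss version="2.0"><channel>
<title>Example Newspaper</title>
<item><title>Exit polls questioned</title><link>http://example.com/a1</link>
<pubDate>Wed, 03 Nov 2004 09:00:00 GMT</pubDate></item>
</channel></rss>"""

FEED_B = """<rss version="2.0"><channel>
<title>Example Weblog</title>
<item><title>Leaked numbers posted</title><link>http://example.org/b1</link>
<pubDate>Tue, 02 Nov 2004 18:30:00 GMT</pubDate></item>
<item><title>What the polls missed</title><link>http://example.org/b2</link>
<pubDate>Wed, 03 Nov 2004 12:15:00 GMT</pubDate></item>
</channel></rss>"""


def parse_feed(xml_text):
    """Turn one RSS 2.0 document into a list of entry dictionaries."""
    channel = ET.fromstring(xml_text).find("channel")
    source = channel.findtext("title", default="")
    entries = []
    for item in channel.findall("item"):
        entries.append({
            "source": source,
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            # RSS dates use the e-mail (RFC 822) date format.
            "published": parsedate_to_datetime(item.findtext("pubDate")),
        })
    return entries


def aggregate(feeds):
    """Merge entries from several feeds into one list, newest first."""
    merged = [entry for xml_text in feeds for entry in parse_feed(xml_text)]
    return sorted(merged, key=lambda e: e["published"], reverse=True)


if __name__ == "__main__":
    for entry in aggregate([FEED_A, FEED_B]):
        print(entry["published"].isoformat(), entry["source"], "-", entry["title"])
```

The point of the sketch is the shape of the idea: because every site publishes its headlines in the same simple structure, one small program can read many sites and present them as a single, time-ordered collection.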

Specialized search tools, such as Technorati and Feedster, are emerging to help us gather and sort good material from bad. They’re still fairly crude in many ways, but they are improving quickly and help point to more useful systems. Reputation systems, where we can easily learn what people we trust consider trustworthy themselves, are on the way.

This is not going to be a smooth transition. But I still believe, in an era where so much is so centralized, that more voices are ultimately better than fewer. We have to sort it out. It will be messy and worth the trouble.

Dan Gillmor is technology columnist for the San Jose Mercury News and author of “We the Media: Grassroots Journalism by the People, for the People,” published in 2004.